
We present Interactive Neural Video Editing (INVE), a real-time video editing solution that assists the video editing process by consistently propagating sparse frame edits to the entire video clip.

Our method is inspired by the recent work Layered Neural Atlas (LNA). LNA, however, suffers from two major drawbacks: (1) it is too slow for interactive editing, and (2) it offers insufficient support for some editing use cases, including direct frame editing and rigid texture tracking. To address these challenges, we adopt highly efficient network architectures powered by hash-grid encoding, which substantially improve processing speed. In addition, we learn bi-directional functions between image and atlas and introduce vectorized editing, which together enable a much greater variety of edits, both in the atlas and directly in the frames.
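"Hash-grid encoding" usually refers to the multiresolution hash encoding introduced with Instant-NGP. The pure-Python sketch below illustrates that idea for 2D inputs such as atlas (u, v) coordinates. It is an illustration under assumptions, not INVE's implementation: the level count, table size, feature width, base resolution and growth factor are hypothetical values, and in practice the feature tables are trained jointly with a small MLP rather than left at their random initialization.

```python
import math
import random

# Hypothetical, deliberately small parameters (not INVE's settings).
NUM_LEVELS = 4             # number of grid resolutions (L)
TABLE_SIZE = 2 ** 10       # hash-table entries per level (T)
FEATURES = 2               # feature dimension per entry (F)
BASE_RES = 4               # coarsest grid resolution (N_min)
GROWTH = 2.0               # per-level resolution growth factor (b)
PRIMES = (1, 2654435761)   # spatial-hash primes for 2D inputs


def spatial_hash(ix, iy):
    """XOR of coordinate-times-prime, reduced modulo the table size."""
    return ((ix * PRIMES[0]) ^ (iy * PRIMES[1])) % TABLE_SIZE


def make_tables(seed=0):
    """One table of small random feature vectors per resolution level."""
    rng = random.Random(seed)
    return [
        [[rng.uniform(-1e-4, 1e-4) for _ in range(FEATURES)] for _ in range(TABLE_SIZE)]
        for _ in range(NUM_LEVELS)
    ]


def encode(x, y, tables):
    """Encode a point in [0, 1]^2 as concatenated, bilinearly interpolated features."""
    out = []
    for level, table in enumerate(tables):
        res = math.floor(BASE_RES * GROWTH ** level)
        gx, gy = x * res, y * res
        x0, y0 = math.floor(gx), math.floor(gy)
        fx, fy = gx - x0, gy - y0
        feat = [0.0] * FEATURES
        # Blend the features stored at the four surrounding grid corners.
        for dx in (0, 1):
            for dy in (0, 1):
                w = (fx if dx else 1.0 - fx) * (fy if dy else 1.0 - fy)
                entry = table[spatial_hash(x0 + dx, y0 + dy)]
                for k in range(FEATURES):
                    feat[k] += w * entry[k]
        out.extend(feat)
    return out
```

The encoded vector (here `NUM_LEVELS * FEATURES` values) would then feed a small MLP. Each level costs only four table lookups per query, which is the usual explanation for why hash-grid models train and evaluate faster than large coordinate MLPs; the actual speedup depends on the whole system.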

Compared to LNA, INVE reduces learning and inference time by a factor of 5 and supports various video editing operations that LNA cannot. We demonstrate the advantages of INVE over LNA for interactive video editing through a comprehensive quantitative and qualitative analysis.

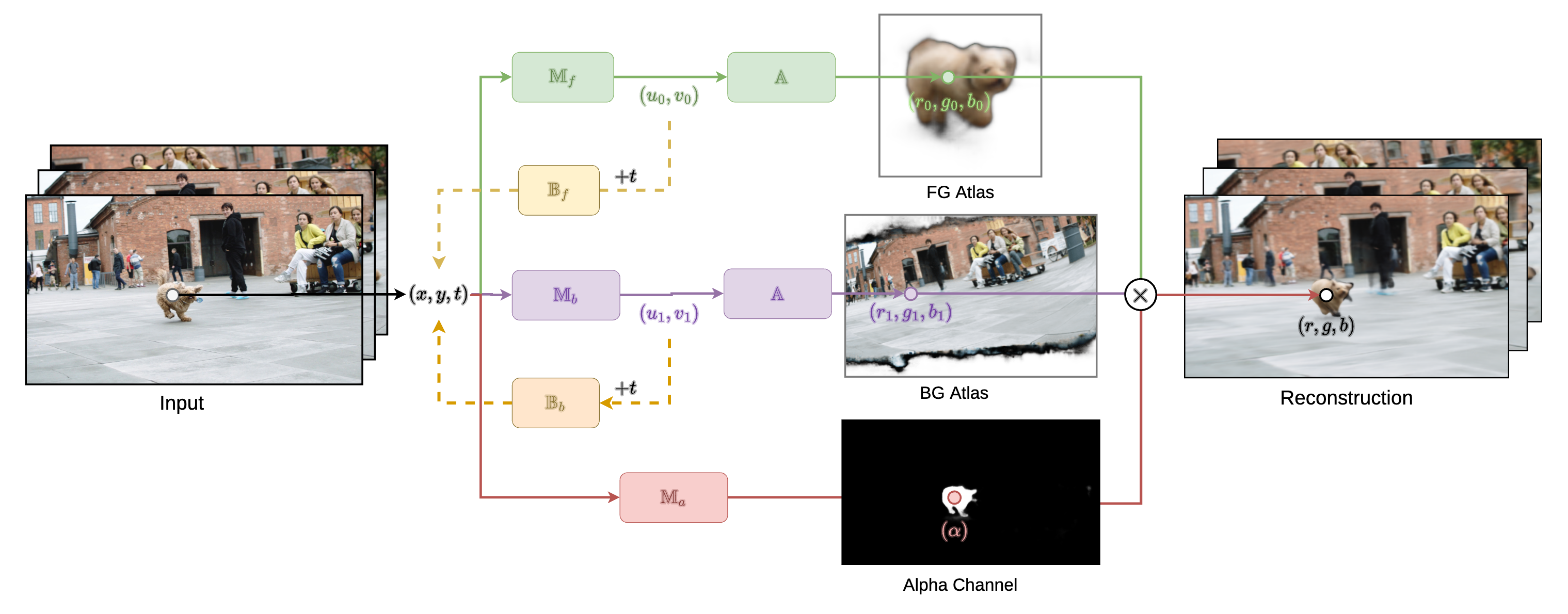

Forward mapping pipeline (solid lines): each video pixel location is fed into two mapping networks, which predict its (u, v) coordinates on each atlas. These coordinates are then fed into the atlas network A to predict the RGB color on that atlas. The opacity value α predicted by the alpha network Mα is used to compose the reconstructed color at location (x, y, t). Backward mapping pipeline (dotted lines): this pipeline maps atlas coordinates to video coordinates; it takes a (u, v) coordinate and a target frame index t as input and predicts the pixel location (x, y, t). Combining the forward and backward pipelines enables long-range point tracking in videos.
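The compositing and tracking described in the caption can be illustrated with closed-form stand-ins for the learned networks. In this sketch, `map_fg`, `map_bg`, `backward_fg`, `alpha`, `atlas_fg` and `atlas_bg` are hypothetical: a foreground disk translates at constant speed over a static background, chosen so that the backward mapping is the exact inverse of the forward one. For readability, the single atlas network A is split into two functions. In the real system all of these are neural networks, and the backward mapping is learned rather than derived.

```python
# Hypothetical closed-form stand-ins for the learned networks.
SPEED = 0.1  # foreground moves +0.1 in x per frame (normalized coordinates)


def map_fg(x, y, t):
    """Forward mapping: video (x, y, t) -> foreground atlas (u, v)."""
    return (x - SPEED * t, y)


def map_bg(x, y, t):
    """Forward mapping for a static camera: the background atlas is the frame."""
    return (x, y)


def backward_fg(u, v, t):
    """Backward mapping: foreground atlas (u, v) and frame t -> video (x, y)."""
    return (u + SPEED * t, v)


def alpha(x, y, t):
    """Stand-in for the alpha network: opaque inside a disk that moves with the object."""
    u, v = map_fg(x, y, t)
    return 1.0 if (u - 0.2) ** 2 + (v - 0.5) ** 2 <= 0.1 ** 2 else 0.0


def atlas_fg(u, v):
    return (1.0, 0.0, 0.0)  # uniformly red foreground object


def atlas_bg(u, v):
    return (0.0, 0.0, v)    # vertical blue gradient


def reconstruct(x, y, t):
    """Composite: c = alpha * A_fg(M_fg(x,y,t)) + (1 - alpha) * A_bg(M_bg(x,y,t))."""
    a = alpha(x, y, t)
    cf = atlas_fg(*map_fg(x, y, t))
    cb = atlas_bg(*map_bg(x, y, t))
    return tuple(a * f + (1.0 - a) * b for f, b in zip(cf, cb))


def track(x, y, t_src, t_dst):
    """Long-range tracking: forward-map into the atlas, then backward-map to t_dst."""
    u, v = map_fg(x, y, t_src)
    return backward_fg(u, v, t_dst)
```

For example, `track(0.2, 0.5, 0, 3)` returns approximately `(0.5, 0.5)`, and `reconstruct(0.5, 0.5, 3)` is red because the tracked point still lies inside the foreground disk at frame 3.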

Our layered editing pipeline supports multiple types of edits: 1) Sketch Edits, where users can draw scribbles with the brush tool; 2) Local Adjustments, where users can apply local adjustments (brightness, saturation, hue) to a specific region of the scene; 3) Texture Edits, where users can import external graphics that track and deform with the moving object. We showcase several videos edited with our pipeline, demonstrating that our method propagates various types of edits consistently to all frames of a video.
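Because every frame pixel maps into an atlas, an edit defined once in atlas space reaches every frame. The sketch below shows this for a local adjustment. It reuses a hypothetical translating-foreground mapping as a stand-in for the learned network, and the rectangular mask, adjustment parameters and function names are all illustrative assumptions. The HSV arithmetic uses Python's standard `colorsys` module. The sketch does not reproduce INVE's vectorized editing.

```python
import colorsys

SPEED = 0.1  # hypothetical: foreground moves +0.1 in x per frame


def map_fg(x, y, t):
    """Hypothetical stand-in for the learned foreground mapping network."""
    return (x - SPEED * t, y)


def in_edit_region(u, v):
    """Edit mask drawn once in atlas space (a hypothetical rectangle)."""
    return 0.1 <= u <= 0.3 and 0.4 <= v <= 0.6


def local_adjust(rgb, brightness=0.0, saturation=1.0, hue_shift=0.0):
    """Brightness offset, saturation scale and hue rotation, applied in HSV space."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    h = (h + hue_shift) % 1.0
    s = min(max(s * saturation, 0.0), 1.0)
    v = min(max(v + brightness, 0.0), 1.0)
    return colorsys.hsv_to_rgb(h, s, v)


def edited_pixel(rgb, x, y, t, **adjust):
    """Propagate the atlas-space edit: adjust a pixel only if it maps into the mask."""
    u, v = map_fg(x, y, t)
    return local_adjust(rgb, **adjust) if in_edit_region(u, v) else rgb
```

With `hue_shift=1/3`, a red pixel at `(0.2, 0.5)` in frame 0 turns green. At frame 3, the same atlas-space mask covers the pixel at `x = 0.5` instead, and the pixel at `x = 0.2` is left unchanged, so the edit follows the object.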